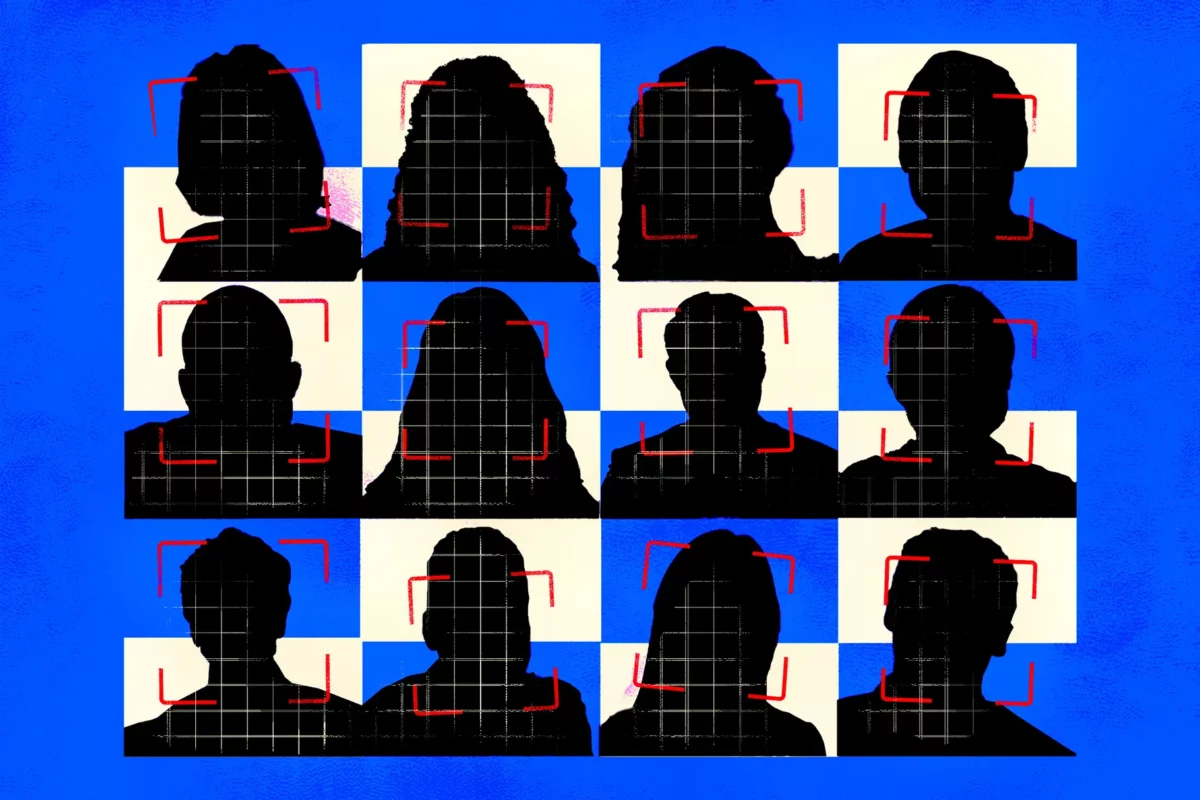

Automated “liveness tests,” used by banks and other institutions to help verify users’ identities, can be easily fooled by deepfakes, according to a new report.

Security firm Sensity, which specializes in spotting attacks using AI-generated faces, probed the vulnerability of identity tests provided by 10 top vendors. Sensity used deepfakes to copy a target face onto an ID card to be scanned and then copied that same face onto a video stream of a would-be attacker to pass vendors’ liveness tests.

Liveness tests generally ask someone to look into the camera on their phone or laptop, sometimes turning their head or smiling, both to prove that they’re a real person and to compare their appearance with their ID using facial recognition. In the financial world, such checks are often known as KYC, or “know your customer,” tests and can form part of a wider verification process that includes document and bill checks.

“We tested 10 solutions and we found that nine of them were extremely vulnerable to deepfake attacks,” Sensity’s chief operating officer, Francesco Cavalli, told The Verge.

“There’s a new generation of AI power that can pose serious threats to companies,” says Cavalli. “Imagine what you can do with fake accounts created with these techniques. And no one can detect them.”

Sensity shared the identities of the enterprise vendors it tested with The Verge but requested that the names not be published for legal reasons. Cavalli says Sensity signed non-disclosure agreements with some of the vendors and, in other cases, fears it may have violated companies’ terms of service by testing their software in this way.

Cavalli also says he was disappointed by the reaction from vendors, who did not seem to consider the attacks significant. “We told them ‘look you’re vulnerable to this kind of attack,’ and they said ‘we do not care,’” he says. “We decided to publish it because we think, at a corporate level and in general, the public should be aware of these threats.”

The vendors Sensity tested sell these liveness checks to a range of clients, including banks, dating apps, and cryptocurrency startups. One vendor was even used to verify the identity of voters in a recent national election in Africa. (Though there’s no suggestion from Sensity’s report that this process was compromised by deepfakes.)

Cavalli says such deepfake identity spoofs are primarily a danger to the banking system, where they can be used to facilitate fraud. “I can create an account; I can move illegal money into digital bank accounts or crypto wallets,” says Cavalli. “Or maybe I can ask for a mortgage because today online lending companies are competing with one another to issue loans as fast as possible.”

This is not the first time deepfakes have been identified as a danger to facial recognition systems. They’re primarily a threat when the attacker can hijack the video feed from a phone or camera, a relatively simple task. However, facial recognition systems that use depth sensors — like Apple’s Face ID — cannot be fooled by these sorts of attacks, as they verify identity not only based on visual appearance but also the physical shape of a person’s face.